Approach & Method

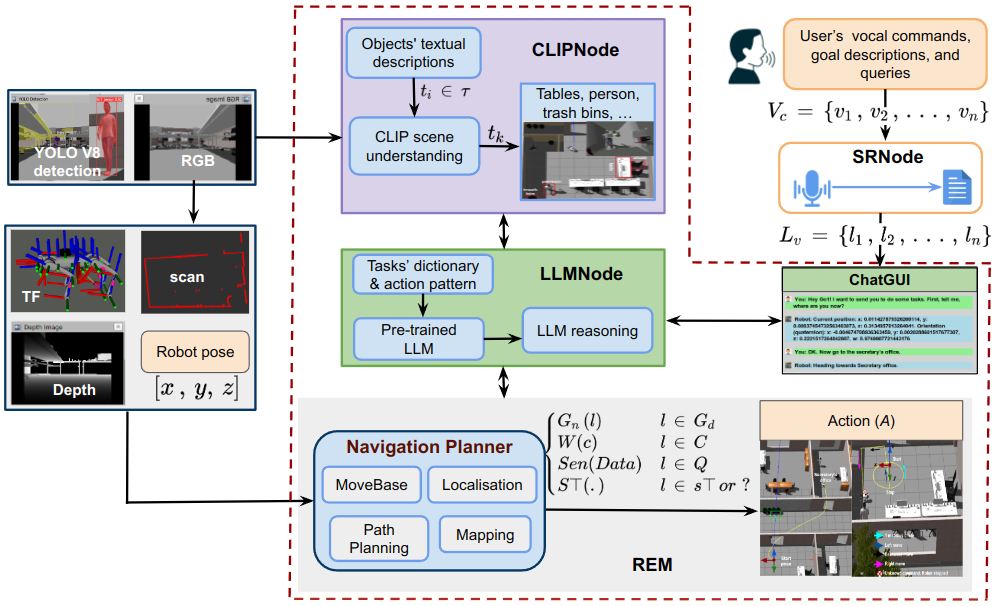

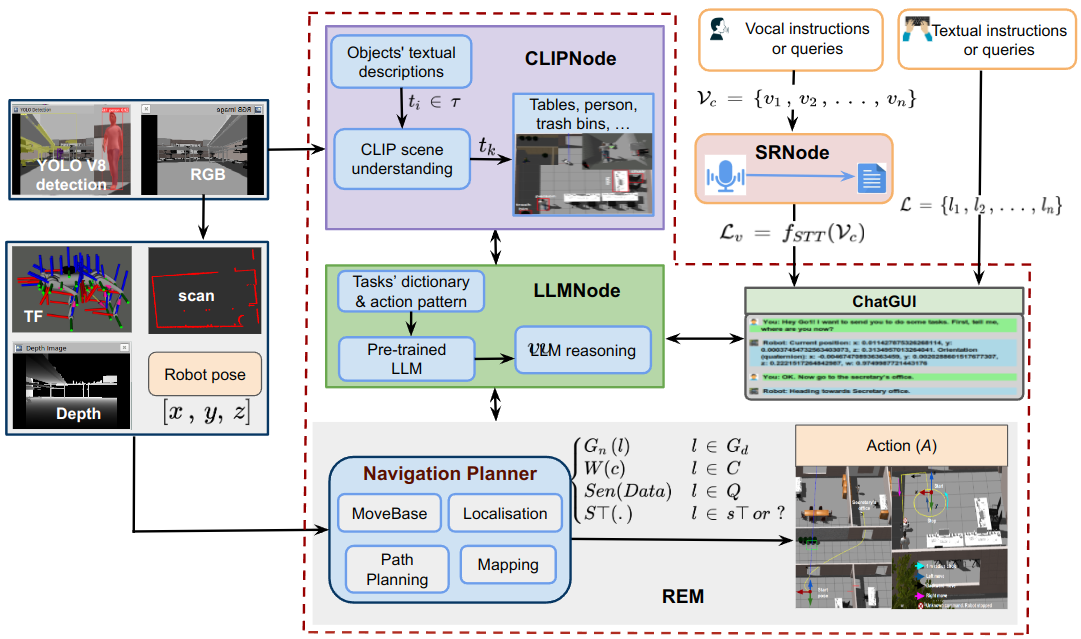

Architectural overview of the framework

LLMNode interprets text-based natural language conversations.

CLIPNode provides a visual and semantic understanding of the robot's task environment.

The REM node translates the high-level understanding produced by the LLMNode into actions on the physical robot.

ChatGUI serves as the user's primary text-based interaction point.

SRNode decodes spoken natural language conversations from humans.
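To make the data flow between these components concrete, here is a minimal, self-contained sketch of how they could be wired together. It is not the framework's implementation. It assumes a hypothetical in-process publish/subscribe `Bus` in place of whatever middleware the framework actually uses. The topic names (`user_text`, `scene`, `intent`) and the robot primitives (`move_to`, `open_gripper`, ...) are also invented for illustration. Finally, a keyword rule stands in for the LLM, and a list of object names stands in for CLIP's visual output. The point is the topology: ChatGUI and SRNode both feed user text to the LLMNode, CLIPNode supplies scene context, and the REM node turns the resulting high-level intent into low-level actions.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class Bus:
    """Hypothetical in-process pub/sub bus standing in for the real middleware."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> None:
        for callback in self._subs[topic]:
            callback(msg)


class ChatGUI:
    """Text entry point: forwards typed user messages."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus

    def send(self, text: str) -> None:
        self.bus.publish("user_text", text)


class SRNode:
    """Speech entry point; the 'audio' is already a string here (placeholder for a recognizer)."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus

    def on_audio(self, audio: str) -> None:
        transcript = audio.strip().lower()  # stand-in for real speech recognition
        self.bus.publish("user_text", transcript)


class CLIPNode:
    """Publishes the objects it 'sees' (placeholder for CLIP-based scene understanding)."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus

    def observe(self, visible_objects: List[str]) -> None:
        self.bus.publish("scene", list(visible_objects))


class LLMNode:
    """Combines user text with the latest scene into a high-level intent (keyword rule, not an LLM)."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus
        self.scene: List[str] = []
        bus.subscribe("scene", self._on_scene)
        bus.subscribe("user_text", self._on_text)

    def _on_scene(self, objects: List[str]) -> None:
        self.scene = objects

    def _on_text(self, text: str) -> None:
        text = text.lower()
        # Choose the first currently visible object that the request mentions.
        target = next((obj for obj in self.scene if obj in text), None)
        if "pick" in text and target is not None:
            self.bus.publish("intent", {"verb": "pick", "object": target})


class REMNode:
    """Maps a high-level intent onto a sequence of (hypothetical) robot primitives."""

    def __init__(self, bus: Bus) -> None:
        self.executed: List[str] = []
        bus.subscribe("intent", self._on_intent)

    def _on_intent(self, intent: Dict[str, str]) -> None:
        if intent["verb"] == "pick":
            obj = intent["object"]
            self.executed += [f"move_to({obj})", "open_gripper()", "close_gripper()", "lift()"]


# Usage: wire the nodes to one bus, describe the scene, then issue a typed command.
bus = Bus()
gui, sr, clip = ChatGUI(bus), SRNode(bus), CLIPNode(bus)
llm, rem = LLMNode(bus), REMNode(bus)
clip.observe(["cup", "book"])
gui.send("Please pick up the cup")
print(rem.executed)  # ['move_to(cup)', 'open_gripper()', 'close_gripper()', 'lift()']
```

Because both ChatGUI and SRNode publish to the same `user_text` topic in this sketch, the LLMNode does not need to know whether a request was typed or spoken. That separation of input modality from interpretation is one plausible reading of why the architecture keeps them as distinct nodes.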