Multimodal Human-Autonomous Agents Interaction Using Pre-Trained Language and Visual Foundation Models

Abstract

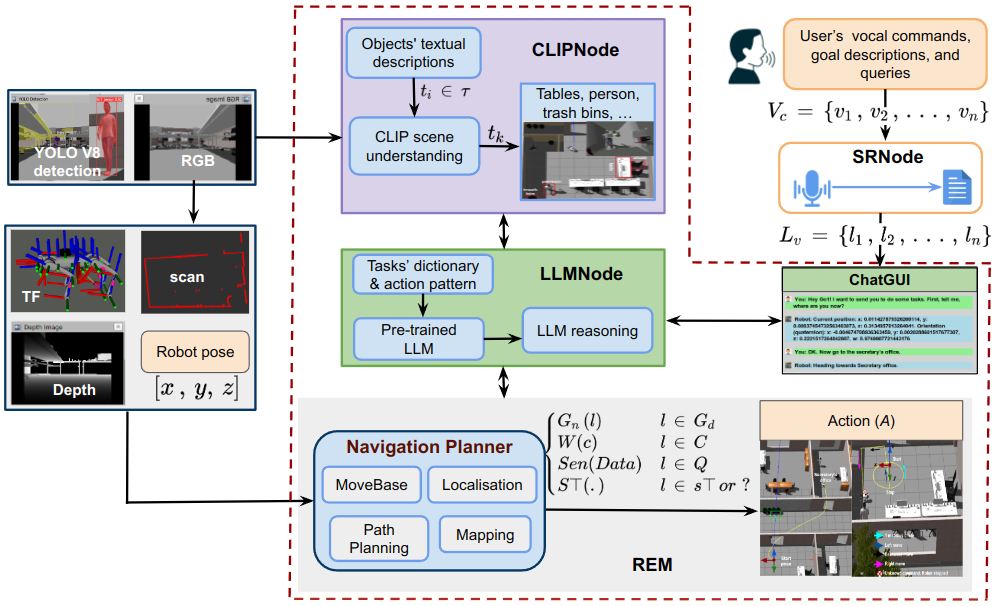

In this paper, we extend the method proposed in [project] to enable humans to interact naturally with autonomous agents through spoken and text-based conversation. The extended method exploits the inherent capabilities of pre-trained large language models (LLMs), multimodal visual language models (VLMs), and speech recognition (SR) models to decode high-level natural-language conversations, build a semantic understanding of the robot's task environment, and translate both into actionable robot commands or queries. We quantitatively evaluated the framework's understanding of natural spoken conversation with participants of diverse racial backgrounds and English accents, who instructed the robot using both vocal and textual commands. Based on the logged interaction data, the framework achieved 87.55% accuracy in decoding vocal commands, an 86.27% command-execution success rate, and an average latency of 0.89 seconds between receiving a participant's vocal command and the start of the robot's physical action.
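The three reported metrics are aggregates over logged interactions. The sketch below illustrates how such figures can be computed from per-interaction records. It is not the authors' evaluation code: the `InteractionLog` schema, its field names, and the metric definitions are assumptions. In particular, it assumes decoding accuracy is an exact match between the intended and decoded command, execution success is counted over all logged commands, and latency is measured from command receipt to the start of robot motion.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class InteractionLog:
    """One logged vocal interaction (hypothetical schema, not the authors' format)."""
    intended_command: str   # command the participant meant to give
    decoded_command: str    # command produced by the (assumed) SR + LLM pipeline
    executed_ok: bool       # whether the robot completed the commanded action
    t_received: float       # time (s) at which the vocal command was received
    t_action_start: float   # time (s) at which the robot began physical motion


def summarize(logs: list[InteractionLog]) -> dict[str, float]:
    """Return decoding accuracy (%), execution success (%), and mean latency (s).

    Assumed definitions: decoding is correct on an exact string match, and
    execution success is the fraction of all logged commands executed.
    """
    if not logs:
        raise ValueError("no interaction logs to summarize")
    n = len(logs)
    decoded_right = sum(log.decoded_command == log.intended_command for log in logs)
    executed = sum(log.executed_ok for log in logs)
    latencies = [log.t_action_start - log.t_received for log in logs]
    return {
        "decoding_accuracy_pct": 100.0 * decoded_right / n,
        "execution_success_pct": 100.0 * executed / n,
        "mean_latency_s": mean(latencies),
    }


if __name__ == "__main__":
    # Toy records for illustration only; these are NOT the study's data.
    toy_logs = [
        InteractionLog("move_forward", "move_forward", True, 0.0, 0.8),
        InteractionLog("turn_left", "turn_right", False, 10.0, 11.0),
        InteractionLog("stop", "stop", True, 20.0, 20.9),
        InteractionLog("go_to_kitchen", "go_to_kitchen", False, 30.0, 30.9),
    ]
    print(summarize(toy_logs))
```

On the four toy records, three commands are decoded correctly and two are executed, giving 75% decoding accuracy, 50% execution success, and a mean latency of about 0.9 s. Execution success is kept separate from decoding accuracy because a correctly decoded command can still fail during physical execution.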

Experiments

Real World Experiments with Segway RMP Lite 220 Mobile Robot

Simulation Experiments with Unitree Go1 Quadruped Robot

Citation

If you use this work in your research, please cite it using the following BibTeX entry:

@misc{nwankwo2024multimodal,
  title={Multimodal Human-Autonomous Agents Interaction Using Pre-Trained Language and Visual Foundation Models},
  author={Linus Nwankwo and Elmar Rueckert},
  year={2024},
  eprint={2403.12273},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}